Summary

Network configuration management is often overlooked. Worse, companies with strong change management practices sometimes believe that, because of those practices, they do not need configuration management at all.

The problem is that commands entered on network gear do not always take effect the way we expect. We also want to compare history and easily diff changes for root cause analysis.

Rancid

Rancid is a free, open source tool that handles exactly this. I have used it successfully for the past few years. It has been a great tool, catching the occasional typo as well as commands that were applied in unexpected ways.

At a high level, rancid logs in to each device, pulls the full configuration on every run, and commits it to a version control system such as CVS or Subversion. Git is also supported and popular, but it is not really necessary here since we will not be branching.

Once the configs are in version control, producing diffs is easy. Whenever rancid runs and finds a change, it emails the diff so you can see what changed.
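To make the idea concrete, here is a small illustration that uses plain `diff -u` rather than CVS. The two snapshots are made-up configuration lines, not real rancid output. rancid's own diffs come from the version control system, but they read much like this:

```shell
# Illustration only: compare two hypothetical snapshots of one device's config.
# rancid produces its diffs through CVS, but the idea is the same: each run's
# config is compared against the previous revision.
tmp=$(mktemp -d)
printf 'ntp servers { 10.0.0.1 }\nsyslog remote-servers { 10.0.0.5 }\n' > "$tmp/prev"
printf 'ntp servers { 10.0.0.1 10.0.0.2 }\nsyslog remote-servers { 10.0.0.5 }\n' > "$tmp/curr"

# diff exits with status 1 when the files differ, which is expected here.
diff -u "$tmp/prev" "$tmp/curr" > "$tmp/changes.diff" || true
cat "$tmp/changes.diff"
```

Lines starting with `-` were removed, lines starting with `+` were added, and lines starting with a space are unchanged context.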

The initial setup of rancid is often a barrier to entry. Once you get it set up the first time, upgrades are fairly simple.

Installing

For this demo, we are using a VM with a minimal install of CentOS 8.0, 1 GB of RAM, a 10 GB disk, and 1 CPU core. Production specs need not be much larger than this, depending on how many devices you query and how often.

Let’s download the tar first!

[root@rancid ~]# curl -O https://shrubbery.net/pub/rancid/rancid-3.10.tar.gz

Next we need to install some dependencies! Expect is the brains of rancid and is used to send data to and receive data from the network devices. Many of the modules that manipulate the received data are written in Perl. Gcc and make are used to build the source code.

We need some sort of mailer, hence sendmail. You can use postfix if you prefer that.
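rancid mails the diffs for each group to the alias rancid-<group>, and administrative and error messages to rancid-admin-<group>. Those aliases therefore need to exist once the mailer is installed. Here is a minimal sketch that prints the entries. `rancid_aliases` is a hypothetical helper, and netops@example.com is a placeholder recipient:

```shell
# Print /etc/aliases entries for each rancid group.
# rancid-<group> receives the diffs; rancid-admin-<group> receives admin/error mail.
# Usage: rancid_aliases RECIPIENT GROUP...
rancid_aliases() {
    recipient=$1
    shift
    for group in "$@"; do
        printf 'rancid-%s: %s\n' "$group" "$recipient"
        printf 'rancid-admin-%s: %s\n' "$group" "$recipient"
    done
}

rancid_aliases netops@example.com networking
```

As root, append the output to /etc/aliases and run `newaliases` so sendmail picks up the change.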

We will be using CVS for simplicity and the default configuration of rancid.

[root@rancid rancid-3.10]# yum install expect perl gcc make sendmail

[root@rancid rancid-3.10]# yum install https://dl.fedoraproject.org/pub/epel/epel-release-latest-8.noarch.rpm

[root@rancid rancid-3.10]# yum install cvs

We then need to extract and build the source!
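Before building, it can help to confirm that everything is actually on the PATH. `check_deps` below is a hypothetical helper built on the shell's `command -v`. Keep in mind that sendmail normally lives in /usr/sbin, which may not be on a non-root user's PATH:

```shell
# Report which required programs cannot be found on PATH.
# Prints one line per missing program and returns 1 if anything is missing.
check_deps() {
    missing=0
    for prog in "$@"; do
        if ! command -v "$prog" >/dev/null 2>&1; then
            echo "missing: $prog"
            missing=1
        fi
    done
    return "$missing"
}

# The programs this walkthrough relies on.
check_deps expect perl gcc make cvs sendmail || echo "install the above or fix PATH"
```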

[root@rancid ~]# tar xzf rancid-3.10.tar.gz

[root@rancid ~]# ls -la | grep rancid

drwxr-xr-x. 8 7053 wheel 4096 Sep 30 18:15 rancid-3.10

-rw-r--r--. 1 root root 533821 Nov 5 06:13 rancid-3.10.tar.gz

[root@rancid ~]# cd rancid-3.10

[root@rancid ~]# ./configure

checking for a BSD-compatible install... /usr/bin/install -c

checking whether build environment is sane... yes

checking for a thread-safe mkdir -p... /usr/bin/mkdir -p

checking for gawk... gawk

.....

config.status: creating include/config.h

config.status: executing depfiles commands

[root@rancid rancid-3.10]# make

... tons of output

gmake[1]: Leaving directory '/root/rancid-3.10/share'

[root@rancid rancid-3.10]# make install

[root@rancid rancid-3.10]# ls -la /usr/local/rancid/

total 4

drwxr-xr-x. 7 root root 63 Nov 5 06:21 .

drwxr-xr-x. 13 root root 145 Nov 5 06:20 ..

drwxr-xr-x. 2 root root 4096 Nov 5 06:21 bin

drwxr-xr-x. 2 root root 90 Nov 5 06:21 etc

drwxr-xr-x. 3 root root 20 Nov 5 06:21 lib

drwxr-xr-x. 4 root root 31 Nov 5 06:21 share

drwxr-xr-x. 2 root root 6 Nov 5 06:20 var

We very likely do not want rancid to run as root, so we will create a dedicated user. By default, rancid is installed to /usr/local/rancid, so we will make that the user’s home directory.

[root@rancid rancid-3.10]# useradd -d /usr/local/rancid -M -U rancid

[root@rancid rancid-3.10]# chown rancid:rancid /usr/local/rancid/

[root@rancid rancid-3.10]# chown -R rancid:rancid /usr/local/rancid/*

[root@rancid rancid-3.10]# su - rancid

[rancid@rancid ~]$ pwd

/usr/local/rancid

To preserve permissions, all further changes should be made as the rancid user.

Configuring Rancid

The global rancid configuration file, /usr/local/rancid/etc/rancid.conf, follows the format described here: https://www.shrubbery.net/rancid/man/rancid.conf.5.html
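rancid.conf is a shell fragment that rancid's scripts source, so its settings are plain NAME="value" assignments. If you prefer to script edits like the ones in this article, here is a minimal sketch. `set_conf_var` is a hypothetical helper that replaces an existing line for NAME, commented out or not, or appends a new line if none exists. It assumes the value contains no `|`, `&`, or backslash, since those are special in the sed replacement:

```shell
# Set NAME="VALUE" in a rancid.conf-style file, keeping a .bak copy.
# Replaces any line assigning NAME (commented out or not); otherwise appends.
# Note: the whole line is replaced, so a trailing "; export NAME" is dropped.
set_conf_var() {
    file=$1 name=$2 value=$3
    cp "$file" "$file.bak" || return 1
    if grep -Eq "^[[:space:]]*#?[[:space:]]*$name=" "$file.bak"; then
        sed -E "s|^[[:space:]]*#?[[:space:]]*$name=.*|$name=\"$value\"|" "$file.bak" > "$file"
    else
        cat "$file.bak" > "$file"
        printf '%s="%s"\n' "$name" "$value" >> "$file"
    fi
}
```

For example, as the rancid user: `set_conf_var ~/etc/rancid.conf LIST_OF_GROUPS networking`, and later `set_conf_var ~/etc/rancid.conf SENDMAIL /usr/sbin/sendmail`.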

We need to modify the following line to define our single group, networking:

# list of rancid groups

LIST_OF_GROUPS="networking"

Configuring Devices

cloginrc

.cloginrc, in the rancid user’s home directory, holds the login credentials for each device. It follows the format described here: https://www.shrubbery.net/rancid/man/cloginrc.5.html

[rancid@rancid ~]$ cat .cloginrc

add user test-f5 root

add password test-f5 XXXXXXX
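As I read the cloginrc(5) man page, clogin scans the file from top to bottom and uses the first entry whose pattern matches the device. That means host-specific lines should come before wildcard defaults such as `add method * ssh`. The sketch below is a simplified model of that lookup, not clogin's actual code. `cloginrc_lookup` is hypothetical, uses shell glob matching, returns only the first value of an entry, and ignores quoting and include directives:

```shell
# Simplified model of clogin's .cloginrc lookup: first matching entry wins.
# Usage: cloginrc_lookup FILE DIRECTIVE DEVICE   (prints the value, or returns 1)
cloginrc_lookup() {
    rcfile=$1 directive=$2 device=$3
    # "|| [ -n ... ]" also processes a final line that lacks a newline.
    while read -r cmd dir pattern value rest || [ -n "$cmd" ]; do
        [ "$cmd" = "add" ] || continue          # skip comments and blank lines
        [ "$dir" = "$directive" ] || continue
        # An unquoted $pattern in a case label is matched as a glob.
        case $device in
            $pattern) printf '%s\n' "$value"; return 0 ;;
        esac
    done < "$rcfile"
    return 1
}
```

With `add user * admin` placed above `add user test-f5 root`, the lookup for test-f5 would return admin, which is why the defaults belong at the bottom.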

router.db

router.db lists the devices in a group. It follows the format described here: https://www.shrubbery.net/rancid/man/router.db.5.html
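As I understand the man page, each line is name;type;state, optionally followed by a comment field, and the state is up or down. Typos such as a missing field are easy to make, so here is a minimal sketch of a checker. `check_router_db` is a hypothetical helper, not part of rancid. It skips blank lines and lines starting with #:

```shell
# Sanity-check a router.db: each device line should be name;type;state[;comment]
# with state "up" or "down". Prints offending lines; returns 1 if any are found.
check_router_db() {
    awk -F';' '
        /^[ \t]*#/ || /^[ \t]*$/ { next }       # comments and blank lines
        NF < 3 || $1 == "" || $2 == "" || ($3 != "up" && $3 != "down") {
            printf "line %d: %s\n", NR, $0
            bad = 1
        }
        END { exit (bad ? 1 : 0) }
    ' "$1"
}
```

For example, `check_router_db ~/var/networking/router.db`.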

For our example we put in the following line. Its fields are the device name, the device type, and the device state. Keep in mind that you can use any device name you wish, but it must resolve via DNS or the hosts file.

[rancid@rancid var]$ cat router.db

test-f5;bigip;up

First Run

[rancid@rancid ~]$ bin/rancid-run

[rancid@rancid ~]$

Well, that was anticlimactic. Rancid typically writes nothing to the console. Its output goes to the logs in ~/var/logs instead.
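rancid names each log <group>.YYYYMMDD.HHMMSS (see the listing below), so a plain lexical sort of the names is also chronological. Here is a small sketch for jumping straight to a group's newest log. `latest_log` is a hypothetical helper:

```shell
# Print the path of the newest rancid log for a group.
# Log names look like <group>.YYYYMMDD.HHMMSS, so sorting by name sorts by time.
latest_log() {
    logdir=$1 group=$2
    name=$(ls "$logdir" | grep "^$group\.[0-9]\{8\}\.[0-9]\{6\}\$" | sort | tail -n 1)
    [ -n "$name" ] || return 1
    printf '%s/%s\n' "$logdir" "$name"
}
```

For example, `cat "$(latest_log ~/var/logs networking)"`.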

[rancid@rancid logs]$ pwd

/usr/local/rancid/var/logs

[rancid@rancid logs]$ ls -altrh

total 4.0K

drwxr-xr-x. 3 rancid rancid 35 Nov 5 07:00 ..

-rw-r-----. 1 rancid rancid 270 Nov 5 07:00 networking.20191105.070023

drwxr-x---. 2 rancid rancid 40 Nov 5 07:00 .

[rancid@rancid logs]$ cat networking.20191105.070023

starting: Tue Nov 5 07:00:23 CST 2019

/usr/local/rancid/var/networking does not exist.

Run bin/rancid-cvs networking to make all of the needed directories.

ending: Tue Nov 5 07:00:23 CST 2019

[rancid@rancid logs]$

OK, let’s run rancid-cvs. It’s nice that it creates the repositories for you. It versions both the router configs and the router.db files.

[rancid@rancid ~]$ ~/bin/rancid-cvs

No conflicts created by this import

cvs checkout: Updating networking

Directory /usr/local/rancid/var/CVS/networking/configs added to the repository

cvs commit: Examining configs

cvs add: scheduling file `router.db' for addition

cvs add: use 'cvs commit' to add this file permanently

RCS file: /usr/local/rancid/var/CVS/networking/router.db,v

done

Checking in router.db;

/usr/local/rancid/var/CVS/networking/router.db,v <-- router.db

initial revision: 1.1

done

# Proof of CVS creation

[rancid@rancid ~]$ find ./ -type d -name CVS

./var/CVS

./var/networking/CVS

./var/networking/configs/CVS

Run rancid-run again!

[rancid@rancid ~]$ cd var/logs

[rancid@rancid logs]$ ls -altrh

total 8.0K

-rw-r-----. 1 rancid rancid 270 Nov 5 07:00 networking.20191105.070023

drwxr-xr-x. 5 rancid rancid 64 Nov 5 07:04 ..

drwxr-x---. 2 rancid rancid 74 Nov 5 07:05 .

-rw-r-----. 1 rancid rancid 741 Nov 5 07:05 networking.20191105.070555

[rancid@rancid logs]$ cat networking.20191105.070555

starting: Tue Nov 5 07:05:55 CST 2019

cvs add: scheduling file `.cvsignore' for addition

cvs add: use 'cvs commit' to add this file permanently

cvs add: scheduling file `configs/.cvsignore' for addition

cvs add: use 'cvs commit' to add this file permanently

cvs commit: Examining .

cvs commit: Examining configs

RCS file: /usr/local/rancid/var/CVS/networking/.cvsignore,v

done

Checking in .cvsignore;

/usr/local/rancid/var/CVS/networking/.cvsignore,v <-- .cvsignore

initial revision: 1.1

done

RCS file: /usr/local/rancid/var/CVS/networking/configs/.cvsignore,v

done

Checking in configs/.cvsignore;

/usr/local/rancid/var/CVS/networking/configs/.cvsignore,v <-- .cvsignore

initial revision: 1.1

done

ending: Tue Nov 5 07:05:56 CST 2019

The router.db we created in ~/var/router.db needs to move to ~/var/networking/router.db, because each group reads the router.db in its own directory.
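rancid looks for each group's device list in BASEDIR/<group>/router.db. BASEDIR is set in rancid.conf and, in this install, is /usr/local/rancid/var (as the earlier "does not exist" log line shows). Here is a sketch of a check that flags a missing or device-less router.db for each group. `check_group_dbs` is a hypothetical helper, not part of rancid:

```shell
# Report groups whose router.db is missing or lists no devices.
# Usage: check_group_dbs BASEDIR GROUP...   (returns 1 if any problem is found)
check_group_dbs() {
    basedir=$1
    shift
    status=0
    for group in "$@"; do
        db="$basedir/$group/router.db"
        if [ ! -f "$db" ]; then
            echo "$group: $db does not exist"
            status=1
        elif ! grep -q '^[^#[:space:]]' "$db"; then
            # No line starts with something other than a comment or whitespace.
            echo "$group: $db has no devices"
            status=1
        fi
    done
    return "$status"
}
```

For example, `check_group_dbs ~/var networking`.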

[rancid@rancid var]$ mv ~/var/router.db ~/var/networking/

[rancid@rancid var]$ ~/bin/rancid-run

[rancid@rancid var]$ cd logs

[rancid@rancid logs]$ ls -la

total 12

drwxr-x---. 2 rancid rancid 108 Nov 5 07:08 .

drwxr-xr-x. 5 rancid rancid 47 Nov 5 07:08 ..

-rw-r-----. 1 rancid rancid 270 Nov 5 07:00 networking.20191105.070023

-rw-r-----. 1 rancid rancid 741 Nov 5 07:05 networking.20191105.070555

-rw-r-----. 1 rancid rancid 1899 Nov 5 07:08 networking.20191105.070840

[rancid@rancid logs]$ cat networking.20191105.070840

starting: Tue Nov 5 07:08:40 CST 2019

/usr/local/rancid/bin/control_rancid: line 433: sendmail: command not found

cvs add: scheduling file `test-f5' for addition

cvs add: use 'cvs commit' to add this file permanently

RCS file: /usr/local/rancid/var/CVS/networking/configs/test-f5,v

done

Checking in test-f5;

/usr/local/rancid/var/CVS/networking/configs/test-f5,v <-- test-f5

initial revision: 1.1

done

Added test-f5

Trying to get all of the configs.

test-f5: missed cmd(s): all commands

test-f5: End of run not found

test-f5 clogin error: Error: /usr/local/rancid/.cloginrc must not be world readable/writable

#

This file does contain passwords after all, so let’s lock it down:

[rancid@rancid ~]$ chmod 750 .cloginrc

[rancid@rancid ~]$
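clogin refuses a .cloginrc that is world readable or writable. Here is a sketch of an equivalent check using find's -perm tests on the "other" permission bits; the helper name is hypothetical. The 750 mode above passes. 600 (owner read/write only) is the more conventional choice for a file that holds passwords:

```shell
# Succeed only if FILE grants neither read nor write permission to "other".
cloginrc_perms_ok() {
    # -perm -004: world-readable bit set; -perm -002: world-writable bit set.
    [ -z "$(find "$1" \( -perm -004 -o -perm -002 \) -print)" ]
}
```

For example, `cloginrc_perms_ok ~/.cloginrc || chmod 600 ~/.cloginrc`.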

Iterative Approach

I went through a few rounds of troubleshooting with the logs. Hardly anyone gets the install 100% correct the first time, so it is worth knowing how to check the logs and make changes accordingly.

The final .cloginrc looks like this:

[rancid@rancid ~]$ cat .cloginrc

add user test-f5 root

add password test-f5 XXXXXXXXXX

#defaults for most devices

add autoenable * 1

add method * ssh

In rancid.conf, this line needed to be changed:

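SENDMAIL="/usr/sbin/sendmail"

Giving rancid the full path to sendmail fixes the "sendmail: command not found" error from earlier. And now we have a clean run!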

[rancid@rancid ~]$ cat var/logs/networking.20191105.073209

starting: Tue Nov 5 07:32:09 CST 2019

Trying to get all of the configs.

All routers successfully completed.

cvs diff: Diffing .

cvs diff: Diffing configs

cvs commit: Examining .

cvs commit: Examining configs

ending: Tue Nov 5 07:32:20 CST 2019

Scheduled Runs

On UNIX, cron is the usual way to run scheduled jobs, and here is a good crontab to start with. Install it for the rancid user. You can edit the crontab by running “crontab -e” or list it by running “crontab -l”.

#Run config differ twice daily

02 2,14 * * * /usr/local/rancid/bin/rancid-run

#Clean out config differ logs

58 22 * * * /usr/bin/find /usr/local/rancid/var/logs -type f -mtime +7 -delete

This crontab runs rancid twice a day, at 02:02 and 14:02. It also deletes logs older than 7 days once a day at 22:58, because we do not want noisy logs to fill up the drive.
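Before trusting -delete in a cron job, it is worth previewing what it would remove. Here is a minimal sketch with a hypothetical `prune_logs` helper. Note that -mtime +7 matches files at least 8 full days old, because find rounds a file's age down to whole days:

```shell
# Preview or perform the log cleanup from the crontab above.
# Usage: prune_logs DIR DAYS [--dry-run]
# With --dry-run, list the files that would be deleted instead of deleting them.
prune_logs() {
    dir=$1 days=$2
    if [ "$3" = "--dry-run" ]; then
        find "$dir" -type f -mtime +"$days" -print
    else
        find "$dir" -type f -mtime +"$days" -delete
    fi
}
```

For example, `prune_logs /usr/local/rancid/var/logs 7 --dry-run`.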

Web Interface

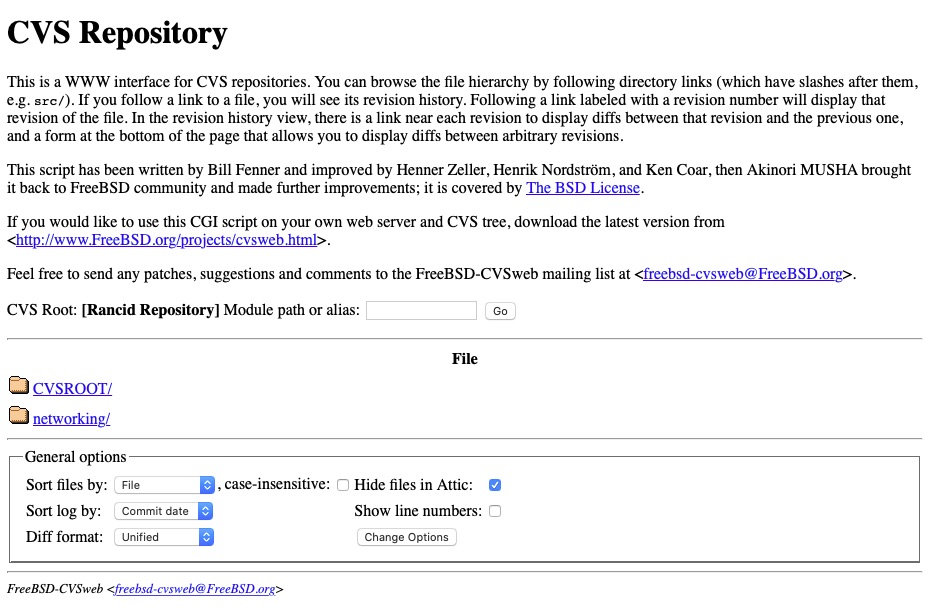

Rancid is almost entirely CLI-based, but there are add-on web front ends for CVS that we can use, namely cvsweb. It came out of the FreeBSD project, which was a heavy user of CVS.

cvsweb requires Apache and RCS. At the time of writing, RCS did not yet exist in EPEL for CentOS 8.0.

[root@rancid ~]# yum install httpd

[root@rancid ~]# curl -O https://people.freebsd.org/~scop/cvsweb/cvsweb-3.0.6.tar.gz

[root@rancid ~]# tar xzf cvsweb-3.0.6.tar.gz

[root@rancid cvsweb-3.0.6]# cp cvsweb.cgi /var/www/cgi-bin/

[root@rancid cvsweb-3.0.6]# mkdir /usr/local/etc/cvsweb/

[root@rancid cvsweb-3.0.6]# cp cvsweb.conf /usr/local/etc/cvsweb/

[root@rancid httpd]# chmod 755 /var/www/cgi-bin/cvsweb.cgi

We need to tell cvsweb where the repository is! In /usr/local/etc/cvsweb/cvsweb.conf, find the following section and add a ‘Rancid’ entry:

@CVSrepositories = (

'Rancid' => ['Rancid Repository', '/usr/local/rancid/var/CVS'],

Now let’s start up apache and let it rip!

[root@rancid cvsweb-3.0.6]# systemctl enable httpd

Created symlink /etc/systemd/system/multi-user.target.wants/httpd.service → /usr/lib/systemd/system/httpd.service.

[root@rancid cvsweb-3.0.6]# systemctl start httpd

# Enable port 80 on firewall

[root@rancid httpd]# firewall-cmd --zone=public --add-service=http --permanent

success

[root@rancid httpd]# firewall-cmd --reload

success

Wait, it still doesn’t work. Let’s check /var/log/httpd/error_log:

Can't locate IPC/Run.pm in @INC (you may need to install the IPC::Run module) (@INC contains: /usr/local/lib64/perl5 /usr/local/share/perl5 /usr/lib64/perl5/vendor_perl /usr/share/perl5/vendor_perl /usr/lib64/perl5 /usr/share/perl5) at /var/www/cgi-bin/cvsweb.cgi line 100.

BEGIN failed--compilation aborted at /var/www/cgi-bin/cvsweb.cgi line 100.

[Tue Nov 05 08:02:58.091776 2019] [cgid:error] [pid 20354:tid 140030446114560] [client ::1:37398] End of script output before headers: cvsweb.cgi

On CentOS 8, the best way to get this module seems to be the perl-IPC-Run package from the PowerTools repository: https://centos.pkgs.org/8/centos-powertools-x86_64/perl-IPC-Run-0.99-1.el8.noarch.rpm.html

[root@rancid httpd]# dnf --enablerepo=PowerTools install perl-IPC-Run

Then I ran into the following errors, which seem to be a known bug. I manually edited the file as recommended in the patch.
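The syntax error at line 1195 in the output below matches a known Perl change. Since Perl 5.18, `qw()` can no longer stand in for the parentheses around a `foreach` list, so `for my $v qw(a b)` must become `for my $v (qw(a b))`. If you would rather script that part of the fix than edit by hand, here is a sketch. `fix_qw_loops` is hypothetical. It assumes each such loop sits on one line, and it does not address the separate `$tmp` warning:

```shell
# Wrap bare qw() lists in foreach loops with parentheses, keeping a .bak copy.
#   for my $v qw(a b) {   ->   for my $v (qw(a b)) {
fix_qw_loops() {
    f=$1
    cp "$f" "$f.bak" || return 1
    # Redirecting into the existing file keeps its mode (e.g. 755 for a CGI).
    sed -E 's/(for(each)?[[:space:]]+my[[:space:]]+\$[A-Za-z_]+[[:space:]]+)(qw\([^)]*\))/\1(\3)/' "$f.bak" > "$f"
}
```

Afterwards, `perl -c /var/www/cgi-bin/cvsweb.cgi` checks whether the script compiles.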

"my" variable $tmp masks earlier declaration in same statement at /var/www/cgi-bin/cvsweb.cgi line 1338.

syntax error at /var/www/cgi-bin/cvsweb.cgi line 1195, near "$v qw(hidecvsroot hidenonreadable)"

Global symbol "$v" requires explicit package name (did you forget to declare "my $v"?) at /var/www/cgi-bin/cvsweb.cgi line 1197.

Global symbol "$v" requires explicit package name (did you forget to declare "my $v"?) at /var/www/cgi-bin/cvsweb.cgi line 1197.

syntax error at /var/www/cgi-bin/cvsweb.cgi line 1276, near "}"

(Might be a runaway multi-line << string starting on line 1267)

syntax error at /var/www/cgi-bin/cvsweb.cgi line 1289, near "}"

syntax error at /var/www/cgi-bin/cvsweb.cgi line 1295, near "}"

syntax error at /var/www/cgi-bin/cvsweb.cgi line 1302, near "}"

syntax error at /var/www/cgi-bin/cvsweb.cgi line 1312, near "}"

syntax error at /var/www/cgi-bin/cvsweb.cgi line 1336, near "}"

syntax error at /var/www/cgi-bin/cvsweb.cgi line 1338, near ""$tmp,v" }"

/var/www/cgi-bin/cvsweb.cgi has too many errors.

Are we there yet?

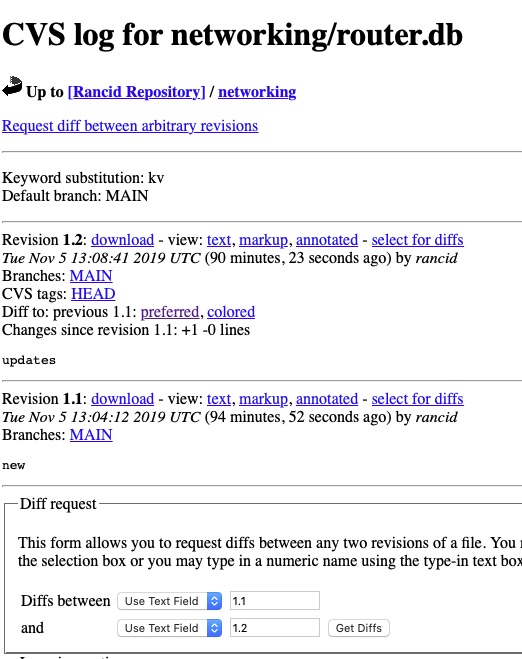

And we can drill into router.db and other areas!

Security

We really should secure this page because 1) we are running Perl CGI scripts, and CGI has a long history of security problems, and 2) the router configs may contain passwords and passphrases.

[root@rancid ~]# htpasswd -c /etc/httpd/.htpasswd dwchapmanjr

New password:

Re-type new password:

Adding password for user dwchapmanjr

[root@rancid ~]#

Create /var/www/cgi-bin/.htaccess:

AuthType Basic

AuthName "Restricted Content"

AuthUserFile /etc/httpd/.htpasswd

Require valid-user

Set permissions so that both files are owned by apache and are not world-readable:

[root@rancid html]# chmod 640 /etc/httpd/.htpasswd

[root@rancid html]# chmod 640 /var/www/cgi-bin/.htaccess

[root@rancid html]# chown apache /etc/httpd/.htpasswd

[root@rancid html]# chown apache /var/www/cgi-bin/.htaccess

We then need to allow overrides in /etc/httpd/conf/httpd.conf so that the .htaccess file actually takes effect:

# Change AllowOverride None to AllowOverride All

<Directory "/var/www/cgi-bin">

#AllowOverride None

AllowOverride All

Options None

Require all granted

</Directory>

Then run “systemctl restart httpd”.

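With any luck, you should now get a username/password prompt! This is not the most secure setup, since Basic authentication over plain HTTP sends credentials essentially in the clear, but it is something.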
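If logins fail even with the right password, a mismatched AuthUserFile path is a common culprit. One example is a Debian-style /etc/apache2 path on CentOS, where Apache's configuration lives under /etc/httpd. Here is a quick sketch that reads the path out of .htaccess and checks it. The helper name is hypothetical, it does not handle quoted paths, and -r is evaluated for whoever runs it, so running it as root only confirms that the file exists:

```shell
# Check that the AuthUserFile named in an .htaccess file exists and is readable.
check_authuserfile() {
    # Apache directive names are case-insensitive; take the first match.
    path=$(awk 'tolower($1) == "authuserfile" { print $2; exit }' "$1")
    if [ -z "$path" ]; then
        echo "no AuthUserFile in $1"
        return 1
    fi
    if [ ! -r "$path" ]; then
        echo "AuthUserFile $path is missing or unreadable"
        return 1
    fi
    echo "AuthUserFile $path OK"
}
```

For example, `check_authuserfile /var/www/cgi-bin/.htaccess`.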

Final Words

In this article we have stood up rancid from scratch. We have also gone over some basic troubleshooting steps and configured apache and cvsweb to visually browse the files.