Summary

Yesterday I was playing a bit with Google Load Balancers, and they tend to work best when connected to a managed (automated) instance group. I may touch on that in another article, but in short, it requires some level of automation. A managed instance group spins up VM instances from an image automatically and, based on health checks, introduces them into the load-balanced pool.

The Problem?

How do we automate provisioning? I have touched on SaltStack in a few articles. Salt is great for configuration management, but in an automated setup, how do you get Salt onto the machine in the first place? That was my goal: get Salt installed on a newly provisioned VM.

Method

Cloud-init is a widely known method of provisioning a machine. From my brief understanding, it started with Ubuntu and then took off. I was briefly exposed to it in Spinning Up Rancher With Kubernetes. It makes sense and is widely supported. The concept is simple: provision the server once, on its first boot.

Google Compute Engine

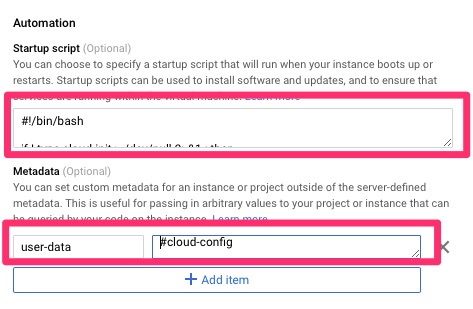

Google Compute Engine (GCE) supports passing cloud-init configuration (cloud-config) through instance metadata. If you set the “user-data” metadata key and cloud-init is installed, cloud-init will find it.

The problem is that the only image that seems to support this out of the box is Ubuntu, and my current preferred platform is CentOS (although that is starting to change).

Startup Scripts

So if we don’t have cloud-init, what can we do? Google does support startup and shutdown scripts via the “startup-script” and “shutdown-script” metadata keys. However, a startup script runs on every boot, and I do not want a script that runs every time. I also do not want to reinvent the wheel by writing a failsafe script that pushes salt-minion out and reconfigures it. For these reasons, I came up with a one-time startup script.

The Solution

Startup Script

This is the startup script I came up with.

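```shell
#!/bin/bash
# One-time bootstrap: install cloud-init if it is missing, then reboot so
# cloud-init can pick up the "user-data" metadata on the next boot.

if ! type cloud-init > /dev/null 2>&1; then
    echo "Ran - $(date)" >> /root/startup

    # Give the network time to come up on first boot (see Workaround below).
    sleep 30

    if yum install -y cloud-init; then
        echo "Ran - Success - $(date)" >> /root/startup
        systemctl enable cloud-init
        #systemctl start cloud-init
    else
        echo "Ran - Fail - $(date)" >> /root/startup
    fi

    # Reboot either way: on success cloud-init runs on the next boot,
    # on failure this script tries again.
    reboot
fi
```

This script checks whether cloud-init is installed. If it is, the script does nothing and doesn’t waste CPU. If it is not, the script waits 30 seconds and installs it. On success it enables the cloud-init service, and in either case it reboots.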
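The script above uses the presence of cloud-init itself as its “already done” signal. A more general pattern for one-time startup work is a marker file that is created only after the work succeeds. The following is a minimal sketch of that alternative, not the approach used above; the `run_once` function name and any marker path are hypothetical.

```shell
#!/bin/bash
# Sketch of a generic "run once" guard based on a marker file.
# run_once and the marker paths are hypothetical, not part of GCE or cloud-init.

# Usage: run_once MARKER COMMAND [ARGS...]
# Runs COMMAND only if MARKER does not exist yet. MARKER is created only when
# COMMAND succeeds, so a failed attempt is retried on the next boot.
run_once() {
    local marker="$1"
    shift
    if [ -e "$marker" ]; then
        return 0        # already done on a previous boot
    fi
    if "$@"; then
        mkdir -p "$(dirname "$marker")" && touch "$marker"
    else
        return 1        # no marker written, so the next boot tries again
    fi
}
```

For example, `run_once /var/lib/bootstrap/cloud-init-installed yum install -y cloud-init` (hypothetical path) would skip the install on every boot after the first successful one. Checking for cloud-init directly, as the script above does, avoids keeping a separate marker in sync with what is actually installed.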

Workaround

I spent a good part of a day trying to get this working. Without the wait and the logging in the script, the install would fail on first boot like this:

```
2019-11-14T18:04:37Z DEBUG DNF version: 4.0.9
2019-11-14T18:04:37Z DDEBUG Command: dnf install -y cloud-init
2019-11-14T18:04:37Z DDEBUG Installroot: /
2019-11-14T18:04:37Z DDEBUG Releasever: 8
2019-11-14T18:04:37Z DEBUG cachedir: /var/cache/dnf
2019-11-14T18:04:37Z DDEBUG Base command: install
2019-11-14T18:04:37Z DDEBUG Extra commands: ['install', '-y', 'cloud-init']
2019-11-14T18:04:37Z DEBUG repo: downloading from remote: AppStream
2019-11-14T18:05:05Z DEBUG error: Curl error (7): Couldn't connect to server for http://mirrorlist.centos.org/?release=8&arch=x86_64&repo=AppStream&infra=stock [Failed to connect to mirrorlist.centos.org port 80: Connection timed out] (http://mirrorlist.centos.org/?release=8&arch=x86_64&repo=AppStream&infra=stock).
2019-11-14T18:05:05Z DEBUG Cannot download 'http://mirrorlist.centos.org/?release=8&arch=x86_64&repo=AppStream&infra=stock': Cannot prepare internal mirrorlist: Curl error (7): Couldn't connect to server for http://mirrorlist.centos.org/?release=8&arch=x86_64&repo=AppStream&infra=stock [Failed to connect to mirrorlist.centos.org port 80: Connection timed out].
2019-11-14T18:05:05Z DDEBUG Cleaning up.
2019-11-14T18:05:05Z SUBDEBUG
Traceback (most recent call last):
  File "/usr/lib/python3.6/site-packages/dnf/repo.py", line 566, in load
    ret = self._repo.load()
  File "/usr/lib64/python3.6/site-packages/libdnf/repo.py", line 503, in load
    return _repo.Repo_load(self)
RuntimeError: Failed to synchronize cache for repo 'AppStream'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/lib/python3.6/site-packages/dnf/cli/main.py", line 64, in main
    return _main(base, args, cli_class, option_parser_class)
  File "/usr/lib/python3.6/site-packages/dnf/cli/main.py", line 99, in _main
    return cli_run(cli, base)
  File "/usr/lib/python3.6/site-packages/dnf/cli/main.py", line 115, in cli_run
    cli.run()
  File "/usr/lib/python3.6/site-packages/dnf/cli/cli.py", line 1124, in run
    self._process_demands()
  File "/usr/lib/python3.6/site-packages/dnf/cli/cli.py", line 828, in _process_demands
    load_available_repos=self.demands.available_repos)
  File "/usr/lib/python3.6/site-packages/dnf/base.py", line 400, in fill_sack
    self._add_repo_to_sack(r)
  File "/usr/lib/python3.6/site-packages/dnf/base.py", line 135, in _add_repo_to_sack
    repo.load()
  File "/usr/lib/python3.6/site-packages/dnf/repo.py", line 568, in load
    raise dnf.exceptions.RepoError(str(e))
dnf.exceptions.RepoError: Failed to synchronize cache for repo 'AppStream'
2019-11-14T18:05:05Z CRITICAL Error: Failed to synchronize cache for repo 'AppStream'
```

Interestingly, it would work on the second boot. I posted about this on ServerFault: https://serverfault.com/questions/991899/startup-script-centos-8-yum-install-no-network-on-first-boot. I will try to update this article if that goes anywhere, as the “sleep 30” is annoying. The first iteration used “sleep 10”, and that did not work.
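Until the root cause is known, one possible alternative to the fixed “sleep 30” is to retry the install a limited number of times. This is a sketch only and has not been tested against this particular first-boot problem; the `retry` function name and the attempt/delay values below are hypothetical.

```shell
#!/bin/bash
# Sketch: retry a command a limited number of times instead of relying on a
# single fixed "sleep 30". The name "retry" and the example values are
# hypothetical, not from the original setup.

# Usage: retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Returns 0 as soon as COMMAND succeeds, or 1 after ATTEMPTS failures.
retry() {
    local attempts="$1" delay="$2"
    shift 2
    local i
    for (( i = 1; i <= attempts; i++ )); do
        if "$@"; then
            return 0
        fi
        echo "Attempt $i/$attempts failed: $*" >&2
        # Only wait if another attempt is coming.
        if (( i < attempts )); then
            sleep "$delay"
        fi
    done
    return 1
}
```

In the startup script, `sleep 30` followed by `if yum install -y cloud-init; then` could become `if retry 10 6 yum install -y cloud-init; then`, which makes up to 10 attempts, 6 seconds apart. Keep in mind that each failed attempt can take a while by itself: in the log above, dnf spent about 28 seconds (18:04:37 to 18:05:05) before the connection timed out.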

It was strange, because I could log in, manually rerun the startup script in debug mode, and it would succeed:

```shell
sudo google_metadata_script_runner --script-type startup --debug
```

Cloud-Init

Our goal was to use cloud-init, right? Cloud-init has a nice module for installing and configuring Salt: https://cloudinit.readthedocs.io/en/latest/topics/modules.html#salt-minion

```yaml
#cloud-config
yum_repos:
  salt-py3-latest:
    baseurl: https://repo.saltstack.com/py3/redhat/$releasever/$basearch/latest
    name: SaltStack Latest Release Channel Python 3 for RHEL/Centos $releasever
    enabled: true
    gpgcheck: true
    gpgkey: https://repo.saltstack.com/py3/redhat/$releasever/$basearch/latest/SALTSTACK-GPG-KEY.pub

salt_minion:
  pkg_name: 'salt-minion'
  service_name: 'salt-minion'
  config_dir: '/etc/salt'
  conf:
    master: salt.example.com
  grains:
    role:
      - web
```

This sets up the SaltStack repository. I prefer it over EPEL, whose Salt packages tend to be dated. It then installs salt-minion, points it at the master, and sets a simple “role” grain to get it going!
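cloud-init only treats user-data as cloud-config when its first line is exactly `#cloud-config`, and YAML does not allow tabs for indentation, so either mistake can stop cloud-init from applying the Salt setup. A quick local check before creating the instance can catch both. This is a minimal sketch; `check_cloud_config` is a hypothetical helper, not part of cloud-init or gcloud, and it does not validate module keys.

```shell
#!/bin/bash
# Sketch of a local preflight check for a cloud-config file before passing it
# as "user-data". check_cloud_config is a hypothetical helper, not part of
# cloud-init or gcloud.

# Usage: check_cloud_config FILE
# Returns 0 if FILE looks like cloud-config, 1 (with a message) otherwise.
check_cloud_config() {
    local file="$1" first=""
    if [ ! -r "$file" ]; then
        echo "$file: cannot read file" >&2
        return 1
    fi
    # cloud-init only treats user-data as cloud-config when the first line
    # is exactly "#cloud-config".
    IFS= read -r first < "$file" || true
    if [ "$first" != "#cloud-config" ]; then
        echo "$file: first line is '$first', expected '#cloud-config'" >&2
        return 1
    fi
    # Heuristic: YAML forbids tabs for indentation, so reject any tab at all.
    if grep -q $'\t' "$file"; then
        echo "$file: contains tab characters" >&2
        return 1
    fi
    return 0
}
```

It can be chained in front of the instance creation, for example `check_cloud_config cloud-init.yaml && gcloud compute instances create ...`, so a malformed file never reaches a new VM.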

How do you set this?

You can set the cloud-config and the startup script in two ways. One is from the command line, if you have the Google Cloud SDK installed:

```shell
gcloud compute instances create test123-17 \
    --machine-type f1-micro \
    --image-project centos-cloud \
    --image-family centos-8 \
    --metadata-from-file user-data=cloud-init.yaml,startup-script=cloud-bootstrap.sh
```

Or you can use the Console and paste both in as plain text.

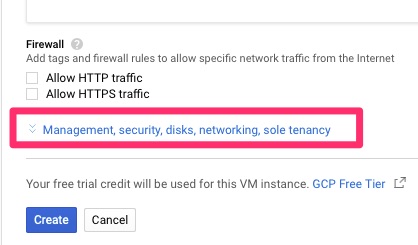

Don’t feel bad if you can’t find these settings in the Console. They are buried in the section shown in the screenshot above.

Final Words

In this article we walked through automating the provisioning of a GCE instance. You can use cloud-init for all sorts of things, such as making sure the machine is completely up to date before handing it off, or adding users and keys. For our needs, we just wanted to get Salt on there so it could plug into configuration management.